Perception for Agricultural Robotics*

I. Introduction

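Perception for an agricultural robot covers both exteroceptive perception, related to its environment, and proprioceptive perception, related to the internal state of the robot (orientation, speed, acceleration, etc.).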

II. Sensors Used by Agricultural Robots

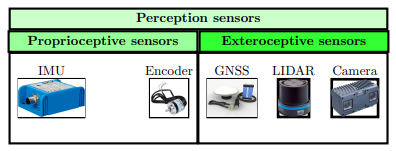

As shown in Figure 1, the sensors used by agricultural robots fall into two main categories: proprioceptive sensors, which measure the internal state of the robot, and exteroceptive sensors, which measure elements of its environment.

Figure 1: Sensors Used by Agricultural Robots

Among proprioceptive sensors, we notably find:

- The inertial measurement unit (IMU): a unit that provides information such as the linear acceleration and angular velocity of the robot.

- Encoders: sensors that measure the rotation of the wheels, from which the distance travelled and the speed can be derived.
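To illustrate how encoder readings become motion information, here is a minimal sketch of dead-reckoning odometry for a differential-drive robot. The encoder resolution, wheel radius, and track width are hypothetical values chosen for illustration, and the function names are not taken from any particular robot or library.

```python
import math

# Hypothetical robot parameters (assumptions for illustration only).
TICKS_PER_REV = 1024      # encoder ticks per wheel revolution
WHEEL_RADIUS_M = 0.15     # wheel radius in metres
TRACK_WIDTH_M = 0.60      # distance between left and right wheels in metres


def ticks_to_distance(ticks):
    """Convert encoder ticks into the distance rolled by the wheel (metres)."""
    return 2.0 * math.pi * WHEEL_RADIUS_M * ticks / TICKS_PER_REV


def update_pose(x, y, heading, left_ticks, right_ticks):
    """Update a 2D pose (x, y, heading in radians) from one pair of tick increments."""
    d_left = ticks_to_distance(left_ticks)
    d_right = ticks_to_distance(right_ticks)
    d_centre = (d_left + d_right) / 2.0             # distance travelled by the robot centre
    d_heading = (d_right - d_left) / TRACK_WIDTH_M  # change in heading
    # Midpoint approximation: move along the average heading during the step.
    x += d_centre * math.cos(heading + d_heading / 2.0)
    y += d_centre * math.sin(heading + d_heading / 2.0)
    heading += d_heading
    return x, y, heading
```

On loose soil, wheel slip makes this kind of estimate drift over time, which is one reason encoder data is usually combined with other sensors.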

As for exteroceptive sensors, we notably find:

- GNSS (Global Navigation Satellite System), for example GPS (Global Positioning System): it uses satellite signals to determine the robot's position relative to a fixed terrestrial reference frame. Its accuracy can be affected by the environment (e.g., obstruction by the canopy). Under normal conditions, it offers metre-level accuracy. When combined with a ground reference station, the accuracy reaches the centimetre level: this is known as RTK (Real-Time Kinematic) GPS.

- The camera: this sensor provides an image of the robot's environment. RGB-D cameras (Red Green Blue - Depth) provide both a colour image and the distance of objects relative to the robot. They are the most common in agriculture. Their main disadvantage remains their low robustness against disruptive elements such as rain, snow, or dust, which can obstruct the lens.

- LIDAR (Light Detection And Ranging): compared with the camera, it is more robust to degraded weather conditions and dust. By emitting light beams and receiving their reflections, it generates a point cloud representing the environment around the robot, which can then be used to map that environment. However, it remains about five times more expensive than a camera.
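As a rough illustration of how a point cloud is obtained from range measurements, the sketch below converts one 2D lidar scan, given as a list of distances at regularly spaced angles, into Cartesian points in the sensor frame. The parameter names (`angle_min`, `angle_increment`, `max_range`) are assumptions made for this example and do not refer to a specific driver.

```python
import math


def scan_to_points(ranges, angle_min, angle_increment, max_range):
    """Convert a 2D lidar scan (polar form) to (x, y) points in the sensor frame.

    ranges          -- measured distances in metres, one per beam
    angle_min       -- angle of the first beam in radians
    angle_increment -- angular step between consecutive beams in radians
    max_range       -- readings beyond this distance are discarded
    """
    points = []
    for i, r in enumerate(ranges):
        # Discard invalid returns; NaN and infinite readings also fail this test.
        if not (0.0 < r <= max_range):
            continue
        angle = angle_min + i * angle_increment
        points.append((r * math.cos(angle), r * math.sin(angle)))
    return points


# Example call with made-up readings: three beams at -90, 0 and +90 degrees.
cloud = scan_to_points([2.0, float("inf"), 1.0], -math.pi / 2, math.pi / 2, 30.0)
```

Here the middle beam returned nothing, so `cloud` contains only the two valid points. A 3D lidar works on the same principle with an additional elevation angle per beam.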

III. Proprioceptive Perception Approaches

To improve accuracy, sensor information is combined, allowing for more reliable localisation. Among the most commonly used algorithms for this type of fusion is the Kalman filter.

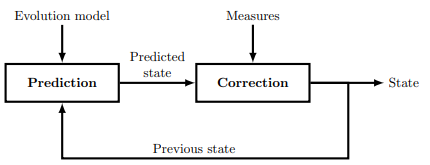

Figure 2: Principle of a Kalman Filter

It relies on two main steps, prediction and correction:

- Prediction: This step uses the robot's previous state and the evolution model to predict its future state.

- Correction: In this step, the predicted state is compared with the measurements obtained by the sensors, and the state estimate is adjusted accordingly, allowing for a more precise localisation of the robot.
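The two steps above can be sketched with a one-dimensional Kalman filter that tracks the robot's position along a crop row. This is a simplified illustration, not a production filter: the state is a single number, the evolution model is assumed to be constant velocity (`x + velocity * dt`), and all variances and measurements are made-up values.

```python
def predict(x, p, velocity, dt, process_var):
    """Prediction: propagate the state with the evolution model x' = x + v * dt.

    x is the position estimate and p its variance; the variance grows by
    process_var because the model is never perfectly accurate.
    """
    x_pred = x + velocity * dt
    p_pred = p + process_var
    return x_pred, p_pred


def correct(x_pred, p_pred, measurement, measurement_var):
    """Correction: blend prediction and measurement according to their variances."""
    gain = p_pred / (p_pred + measurement_var)      # Kalman gain, between 0 and 1
    x_new = x_pred + gain * (measurement - x_pred)  # move towards the measurement
    p_new = (1.0 - gain) * p_pred                   # uncertainty shrinks after correction
    return x_new, p_new


# Illustrative run: speed from odometry (assumed 1 m/s), positions from GNSS (made up).
x, p = 0.0, 1.0
for z in [0.52, 0.98, 1.55]:
    x, p = predict(x, p, velocity=1.0, dt=0.5, process_var=0.01)
    x, p = correct(x, p, z, measurement_var=0.04)
```

A small measurement variance makes the filter trust the sensor more, while a small process variance makes it trust the evolution model more. Real localisation filters apply the same logic to a state vector (position, heading, speed) using matrices.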

IV. Exteroceptive Perception Approaches

These algorithms rely on exteroceptive sensors which, in addition to contributing to proprioceptive perception (as in the case of GNSS RTK localisation), are used to represent the environment.

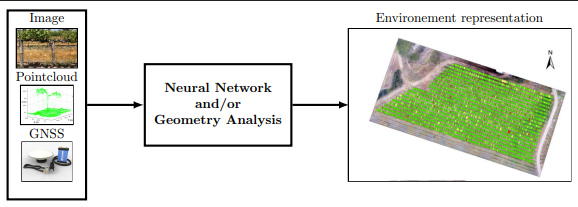

As shown in Figure 3, exteroceptive perception for an agricultural robot primarily aims to model the robot's environment and to locate the robot within it.

Figure 3: Principle of Exteroceptive Perception

The environment relevant to the robot is modelled from images, point clouds, and/or its GNSS position, using approaches based on either artificial intelligence or geometric analysis:

- Artificial intelligence-based approach: a neural network analyses information from the sensors (images, point clouds), identifies and classifies the different elements present in the environment (plants of interest, weeds, obstacles, humans, etc.), and then generates a structured map including the position of each of these elements as well as that of the robot.

- Geometric analysis-based approach: the output is similar, namely a map of the different elements of the environment and the robot. The difference lies in the method: it relies on the geometric analysis of the point clouds provided by the sensors. For example, certain geometric shapes in the point cloud can be detected and assigned to a specific category (plant of interest, weed, etc.).
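As a toy illustration of the geometric approach, the sketch below labels a cluster of 3D points from the size of its bounding box. It assumes the point cloud has already been split into clusters, one per object, and the thresholds and rules are hypothetical; a real system would tune them per crop and use richer shape descriptors.

```python
def bounding_box_size(points):
    """Return the (width, depth, height) of the axis-aligned box around the points."""
    xs, ys, zs = zip(*points)
    return (max(xs) - min(xs), max(ys) - min(ys), max(zs) - min(zs))


def label_cluster(points, crop_min_height=0.30, obstacle_min_width=0.50):
    """Assign a category to one cluster of (x, y, z) points, in metres.

    Hypothetical rule set for illustration:
    - a wide footprint is treated as an obstacle,
    - a narrow but tall cluster is treated as a plant of interest,
    - anything else is treated as a weed.
    """
    width, depth, height = bounding_box_size(points)
    footprint = max(width, depth)
    if footprint >= obstacle_min_width:
        return "obstacle"
    if height >= crop_min_height:
        return "plant of interest"
    return "weed"


# Example with made-up clusters.
tall_plant = [(0.0, 0.0, 0.0), (0.1, 0.1, 0.5)]
small_plant = [(0.0, 0.0, 0.0), (0.05, 0.05, 0.1)]
labels = [label_cluster(tall_plant), label_cluster(small_plant)]
```

Combined with the cluster positions and the robot's own pose, such labels provide the content of the map described above.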

V. Conclusion

In conclusion, an agricultural robot uses proprioceptive and exteroceptive sensors to perceive its environment and localise itself accurately. Among the most common are GNSS (RTK GPS), RGB-D cameras, and LIDAR. When combined with suitable algorithms, these sensors provide the robot with crucial information for navigation and autonomous decision-making.

*: The technical information presented in this article is provided for informational purposes only. It does not replace the official manuals of the manufacturers. Before any installation, handling, or use, please consult the product documentation and follow the safety instructions. The site Torque.works cannot be held responsible for inappropriate use or incorrect interpretation of the information provided.